
Nvidia President Jensen Huang holds a Grace Hopper Superchip CPU used for generative AI at a Supermicro keynote presentation during Computex 2023.

Walid Berezgh | LightRocket | Getty Images

Nvidia on Monday unveiled the H200, a graphics processing unit designed for training and deploying the kinds of artificial intelligence models that are powering the generative AI boom.

The new GPU is an upgrade from the H100, the chip OpenAI used to train its most advanced large language model, GPT-4. Big companies, startups and government agencies are all vying for a limited supply of the chips.

According to an estimate by Raymond James, H100 chips cost between $25,000 and $40,000, and thousands of them must work together to create the biggest models in a process called "training."

Excitement over Nvidia’s AI GPUs has supercharged the company’s stock, which is up more than 230% so far in 2023. Nvidia expects revenue of about $16 billion for its fiscal third quarter, up 170% from a year earlier.

The key improvement with the H200 is that it includes 141GB of next-generation "HBM3" memory, which will help the chip perform "inference": using a large model after it has been trained to generate text, images or predictions.

Nvidia said the H200 will generate output nearly twice as fast as the H100, based on a test using Meta's Llama 2 LLM.

The H200, which is expected to ship in the second quarter of 2024, will compete with AMD's MI300X GPU. Like the H200, AMD's chip has more memory than its predecessors, which helps fit bigger models on the hardware for running inference.

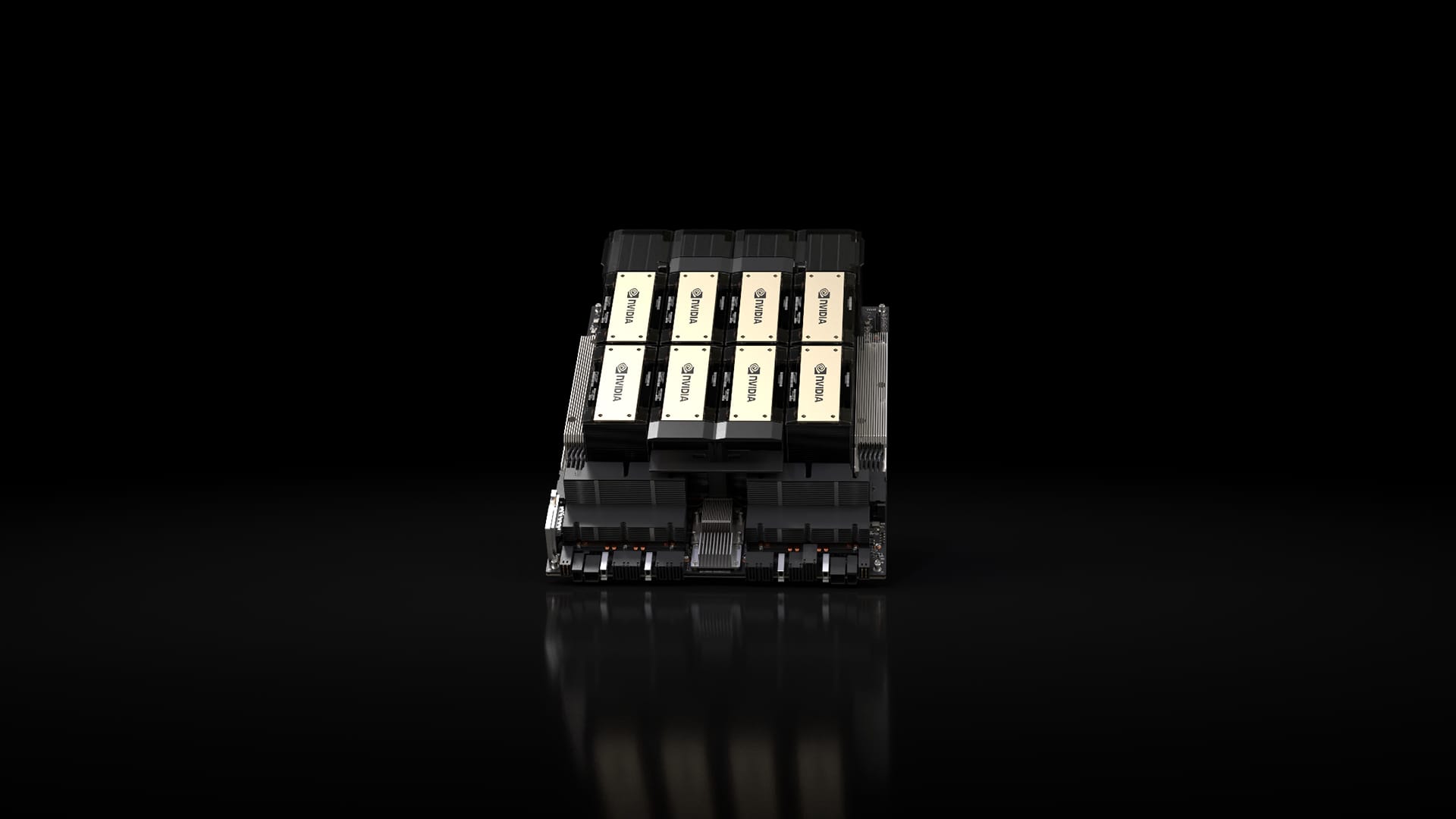

Nvidia H200 chips in an eight-GPU Nvidia HGX system.

NVIDIA

Nvidia said the H200 will be compatible with the H100, meaning AI companies that are already training with the prior model won't need to change their server systems or software to use the new version.

Nvidia says the H200 will be available in four-GPU or eight-GPU server configurations on the company's HGX complete systems, as well as in a chip called the GH200, which pairs the H200 GPU with an Arm-based processor.

However, the H200 may not hold the crown of fastest Nvidia AI chip for long.

While companies like Nvidia offer many different configurations of their chips, new semiconductors often take a big step forward about every two years, when manufacturers move to a different architecture that unlocks more significant performance gains than adding memory or making other smaller optimizations. Both the H100 and the H200 are based on Nvidia's Hopper architecture.

In October, Nvidia told investors it would move from a two-year architecture cadence to a one-year release pattern due to high demand for its GPUs. The company displayed a slide suggesting it will announce and release its B100 chip, based on the forthcoming Blackwell architecture, in 2024.
