
A group of 20 major tech companies on Friday announced a joint commitment to combat AI misinformation in this year’s elections.

The industry is particularly targeting deepfakes, which can use misleading audio, video and images to impersonate key stakeholders in democratic elections or provide false voting information.

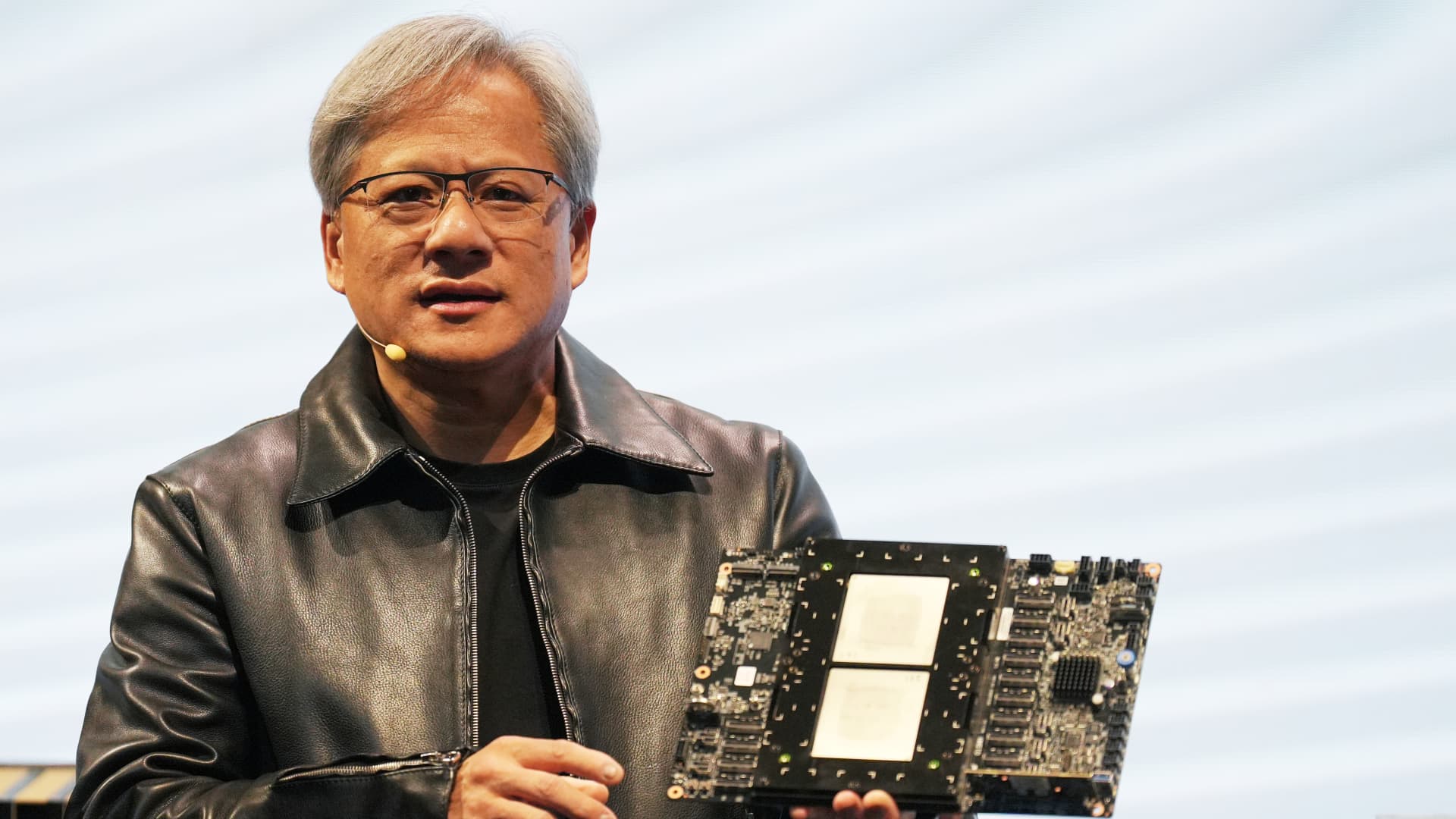

Microsoft, Meta, Google, Amazon, IBM, Adobe and chip designer Arm all signed the agreement. Artificial intelligence startups OpenAI, Anthropic and Stability AI also joined the group, along with social media companies Snap, TikTok and X.

Tech platforms are preparing for a huge year of elections around the world that will affect more than four billion people in more than 40 countries. The rise of AI-generated content has raised serious concerns about election-related misinformation: the number of deepfakes created has increased 900% year over year, according to data from the machine learning firm Clarity.

Election misinformation has been a major problem since the 2016 presidential campaign, when Russian actors found cheap and easy ways to spread misinformation on social platforms. Lawmakers today are even more concerned with the rapid rise of AI.

“There is cause for serious concern about how AI could be used in campaigns to mislead voters,” Josh Becker, a Democratic state senator in California, said in an interview. “It’s encouraging to see some companies coming to the table, but right now I don’t see enough specifics, so we’ll probably need legislation that sets clear standards.”

Meanwhile, the detection and watermarking technologies used to identify deepfakes have not advanced fast enough to keep up. For now, the companies are only agreeing on what amounts to a set of technical standards and detection mechanisms.

They still have a long way to go to combat a problem that has many layers. For example, services that claim to identify AI-generated text, such as essays, have been shown to exhibit bias against non-native English speakers. And the task is not much easier for images and videos.

Even if the platforms behind AI-generated images and videos agree to embed things like invisible watermarks and certain types of metadata, there are ways around those protective measures. Simply taking a screenshot can sometimes fool a detector.

Additionally, the invisible signals that some companies incorporate into AI-generated images have not yet reached many audio and video generators.

News of the deal comes a day after ChatGPT maker OpenAI announced Sora, its new model for AI-generated video. Sora works similarly to OpenAI’s image-generation tool, DALL-E: a user types in a desired scene, and Sora returns a high-definition video clip. Sora can also create video clips inspired by still images, extend existing videos or fill in missing frames.

The companies participating in the accord agreed to eight high-level commitments, including assessing model risks, “seeking to detect” and address the distribution of such content on their platforms, and providing transparency on those processes to the public. As with most voluntary commitments in the tech industry and beyond, the release specified that the commitments apply only “where they are relevant for services each company provides.”

“Democracy rests on safe and secure elections,” Kent Walker, Google’s president of global affairs, said in a release. The accord reflects the industry’s effort to take on “AI-generated election misinformation that erodes trust,” he said.

Christina Montgomery, IBM’s chief privacy and trust officer, said in the release that in this key election year, “concrete, cooperative measures are needed to protect people and societies from the growing risks of AI-generated deceptive content.”
